Bots are programs coded to imitate a person’s behavior on a computer or to automate a task. While plenty of bots are “good” and can be helpful for websites, there are also “bad” bots used by hackers and other less-than-ethical internet users. Click Bots (which fraudulently click on ads, skewing the data reported to advertisers), Download Bots (which inflate download counts and skew engagement data), and Imposter Bots (which try to bypass a website’s security measures) are all “bad” bots you should protect your website against. So, how do you go about it?

How Can Htaccess Help?

Many CMS users rely on plugins to protect their sites against bad bots, but a much more effective and efficient way to protect your website is with a .htaccess file. With .htaccess, you control traffic to your site at the server level: Apache rejects a blocked request before your CMS ever runs, so no PHP code executes, no database queries are made, and no site assets such as JavaScript or CSS are loaded. The whole process is simpler and more streamlined.

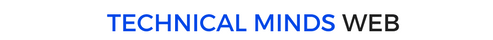

First, copy the code below and paste it into your .htaccess file. It acts as a filter for your website, blocking any request whose user-agent matches one of the known bad bots.

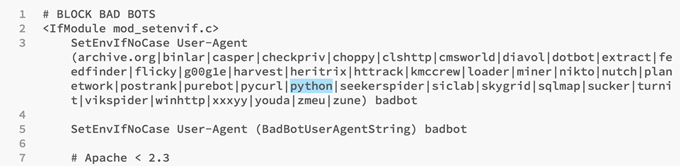

```apache
# BLOCK BAD BOTS
<IfModule mod_setenvif.c>
  # Set the "badbot" variable when the User-Agent contains any of these names (case-insensitive)
  SetEnvIfNoCase User-Agent (archive.org|binlar|casper|checkpriv|choppy|clshttp|cmsworld|diavol|dotbot|extract|feedfinder|flicky|g00g1e|harvest|heritrix|httrack|kmccrew|loader|miner|nikto|nutch|planetwork|postrank|purebot|pycurl|python|seekerspider|siclab|skygrid|sqlmap|sucker|turnit|vikspider|winhttp|xxxyy|youda|zmeu|zune) badbot
  # Placeholder: replace BadBotUserAgentString with another user-agent you want to block
  SetEnvIfNoCase User-Agent (BadBotUserAgentString) badbot

  # Apache < 2.3
  <IfModule !mod_authz_core.c>
    Order Allow,Deny
    Allow from all
    Deny from env=badbot
  </IfModule>

  # Apache >= 2.3
  <IfModule mod_authz_core.c>
    <RequireAll>
      Require all granted
      Require not env badbot
    </RequireAll>
  </IfModule>
</IfModule>
```

The first highlighted section (the two SetEnvIfNoCase lines) flags some of the more infamous bad bots by their user-agent strings, setting a variable named badbot on any matching request.
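To see what the SetEnvIfNoCase line actually matches, here is a rough Python model of it. Apache tests the pattern as an unanchored, case-insensitive regular expression against the User-Agent header, so a request is flagged if any listed name appears anywhere in that header. Python’s `re` module stands in here as an approximation of Apache’s own regex engine, and `is_bad_bot` is a hypothetical helper for experimenting, not something Apache provides.

```python
import re

# The names from the first SetEnvIfNoCase line, in the same order.
BAD_BOTS = (
    "archive.org", "binlar", "casper", "checkpriv", "choppy", "clshttp",
    "cmsworld", "diavol", "dotbot", "extract", "feedfinder", "flicky",
    "g00g1e", "harvest", "heritrix", "httrack", "kmccrew", "loader",
    "miner", "nikto", "nutch", "planetwork", "postrank", "purebot",
    "pycurl", "python", "seekerspider", "siclab", "skygrid", "sqlmap",
    "sucker", "turnit", "vikspider", "winhttp", "xxxyy", "youda",
    "zmeu", "zune",
)

# Joined WITHOUT re.escape() to mirror the .htaccess line exactly,
# so the "." in "archive.org" is a wildcard here, just as it is in Apache.
BAD_BOT_PATTERN = re.compile("(" + "|".join(BAD_BOTS) + ")", re.IGNORECASE)


def is_bad_bot(user_agent: str) -> bool:
    """Return True if the badbot variable would be set for this User-Agent."""
    # re.search matches anywhere in the string, like Apache's unanchored pattern.
    return BAD_BOT_PATTERN.search(user_agent) is not None
```

For instance, `is_bad_bot("Python-urllib/3.11")` is True because the string contains `python`, while the Googlebot user-agent used in the test below is not flagged. Because matching is by substring, it is worth checking any new name this way before adding it, since short names can also match unrelated user-agents that happen to contain them.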

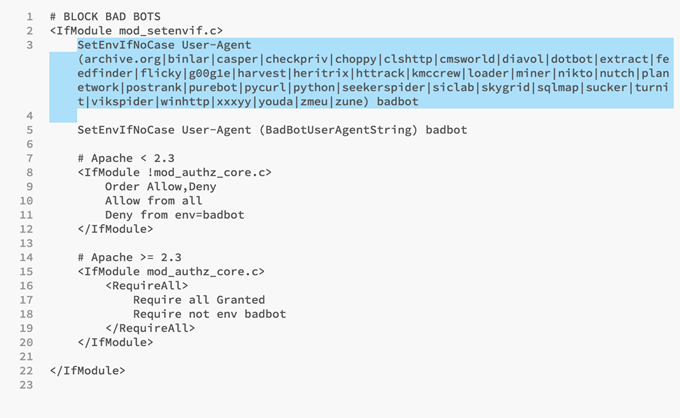

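The next section does the actual blocking: it denies every request flagged with the badbot variable, and Apache answers those requests with 403 Forbidden. It comes in two versions, one using the older Order/Allow/Deny directives (Apache < 2.3) and one using Require (Apache >= 2.3), and the IfModule checks make sure only the version that matches your server is applied.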

For this code to work, you don’t need to edit anything: just save the file, upload it to your server, and you’re all set!

The Technique in Action

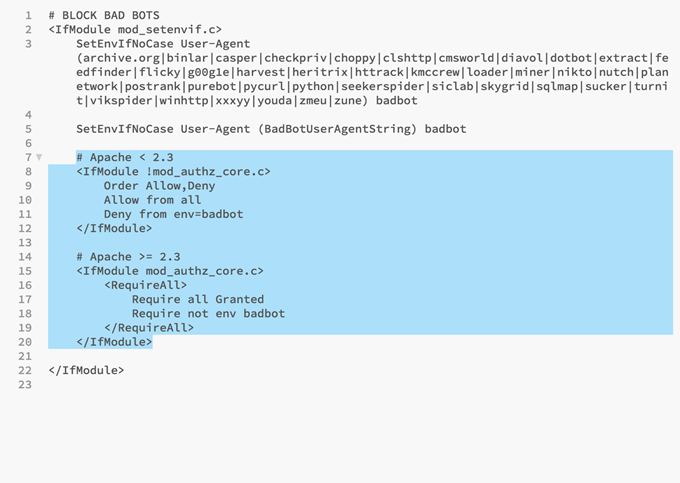

First, let’s try accessing your website as a legitimate bot, such as Googlebot.

Go to this request maker tool. In the Base URL field, type your website address, set the “Request Type” to GET, and click the Add Extra Headers button. A Header Field Name option with two boxes will appear: in the first box, type User-Agent, and in the second, type the Googlebot user-agent, which is Googlebot/2.1 (+http://www.google.com/bot.html)

Then check the “Output Result Headers?” box, verify that you are not a robot, and click Submit.

The response headers should show 200 OK, which means the website is still open to good bots such as Googlebot, the one we tested with.
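The same check can also be scripted instead of going through the web form. Below is a minimal sketch using only Python’s standard-library `urllib`: it sends a GET request with a chosen User-Agent header and returns the HTTP status code. The helper names `build_request` and `status_for`, and the example URL, are hypothetical; substitute your own site.

```python
import urllib.error
import urllib.request

# The Googlebot user-agent string from the manual test.
GOOGLEBOT_UA = "Googlebot/2.1 (+http://www.google.com/bot.html)"


def build_request(url: str, user_agent: str) -> urllib.request.Request:
    """Create a GET request that identifies itself with the given User-Agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent}, method="GET")


def status_for(url: str, user_agent: str) -> int:
    """Send the request and return the final HTTP status code (e.g. 200 or 403)."""
    try:
        with urllib.request.urlopen(build_request(url, user_agent), timeout=10) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urllib raises HTTPError for 4xx/5xx responses; the code is still available.
        return err.code
```

Calling `status_for("https://example.com/", GOOGLEBOT_UA)` against your own site should return 200 for a page that exists, mirroring the manual test. Note that `urlopen` follows redirects, so the code reported is that of the final response, and network failures other than HTTP errors are not caught.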

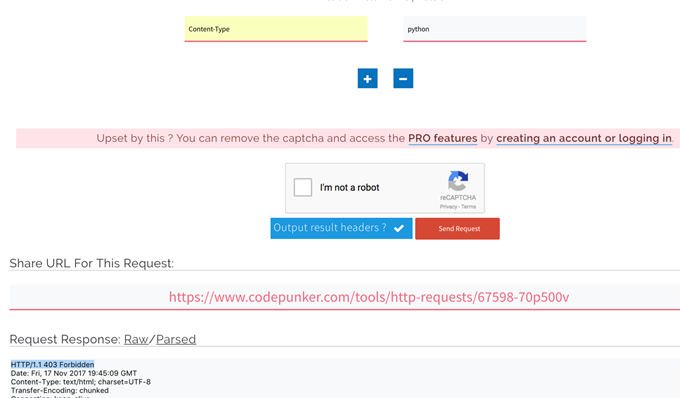

Now let’s repeat the process with one of the bad bots blocked by the .htaccess code, to make sure the block works.

Step 1: Go back to your .htaccess file in your FTP client and copy one of the blocked user-agents (we will use Python for this example).

Step 2: Follow the same process as before, but replace the Googlebot user-agent with Python. As the “403 Forbidden” status code shows, the request from the bad bot has been blocked.
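Python’s standard library happens to identify itself as `Python-urllib/<version>` when no User-Agent is set, and that string contains `python`, one of the names in the block list. That makes a plain `urlopen` call a convenient way to reproduce this bad-bot test from a script. This is a sketch; `default_user_agent` and `is_blocked` are hypothetical helper names, and the site URL is yours to fill in.

```python
import urllib.error
import urllib.request


def default_user_agent() -> str:
    """Return the User-Agent urllib sends when none is set, e.g. 'Python-urllib/3.12'."""
    opener = urllib.request.build_opener()
    # OpenerDirector keeps its default headers as a list of (name, value) pairs.
    return dict(opener.addheaders)["User-agent"]


def is_blocked(url: str) -> bool:
    """Fetch url with urllib's default User-Agent; True if the server answers 403."""
    try:
        with urllib.request.urlopen(url, timeout=10):
            return False  # a non-error response means the request got through
    except urllib.error.HTTPError as err:
        return err.code == 403
```

With the .htaccess rules in place, `is_blocked("https://example.com/")` (using your own URL) should return True. Connection problems raise `urllib.error.URLError` and are deliberately not caught, so they are not mistaken for a block.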

The Bottom Line

With this technique, you can block as many bad bots as you like by adding their user-agent strings to the code. At the end of the day, while plugins may seem more convenient, the .htaccess file is the most effective way to protect your website.
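If you plan to add many user-agents, a small helper can generate the directive for you. This Python sketch (the `setenvif_line` name and its validation rules are illustrative assumptions) joins the names into the same `SetEnvIfNoCase User-Agent (...) badbot` form used in the .htaccess code, escaping regex characters with `re.escape()` so that, for example, a dot matches only a literal dot. It rejects an empty list, which would produce a pattern matching every request, and names containing whitespace or double quotes, which would need extra quoting in an Apache config file.

```python
import re
from typing import Sequence


def setenvif_line(user_agents: Sequence[str], env_var: str = "badbot") -> str:
    """Build one SetEnvIfNoCase directive that flags any of the given user-agents."""
    if not user_agents:
        # An empty alternation "()" would match every User-Agent.
        raise ValueError("at least one user-agent is required")
    for ua in user_agents:
        # Whitespace or quotes would split or break the unquoted Apache argument.
        if not ua or any(ch.isspace() or ch == '"' for ch in ua):
            raise ValueError(f"unsupported user-agent token: {ua!r}")
    # re.escape() makes '.', '+', '(' and similar characters match literally.
    alternation = "|".join(re.escape(ua) for ua in user_agents)
    return f"SetEnvIfNoCase User-Agent ({alternation}) {env_var}"
```

For example, `setenvif_line(["dotbot", "archive.org"])` returns `SetEnvIfNoCase User-Agent (dotbot|archive\.org) badbot`, a line you could paste in place of the `BadBotUserAgentString` placeholder.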

Have any questions or comments? Have you used htaccess before or do you prefer using plugins to block bad bots? Share your thoughts in the comments below!

About The Author:

Emin is a website designer at Amberd Web Design, based in Los Angeles, CA. In his free time, he enjoys writing tutorials and how-to guides to help others.